Title: Introduction

Speaker: C. Ebenbauer, D. Gramlich, C. Scherer

Abstract: We provide an introduction to, and an overview of, various research activities centered around the theme of the workshop.

Brief Bio(s): Christian Ebenbauer received his MS (Dipl.-Ing.) in Telematics (Computer Science and Electrical Engineering) from Graz University of Technology, Austria, in 2000 and his PhD (Dr.-Ing.) in Mechanical Engineering from the University of Stuttgart, Germany, in 2005. After completing his PhD, he was a Postdoctoral Associate and an Erwin Schrödinger Fellow at the Laboratory for Information and Decision Systems, Massachusetts Institute of Technology, USA. From April 2009 until July 2021, he was a full professor at the Institute for Systems Theory and Automatic Control, University of Stuttgart, Germany. Since August 2021, he has held the Chair of Intelligent Control Systems at RWTH Aachen University, Germany.

His research interests lie in the areas of dynamical systems, control theory, optimization and computation. Recent research projects focus on system-theoretic approaches to optimization algorithms, extremum seeking, and receding horizon decision making.

Dennis Gramlich is a PhD student at the Chair of Intelligent Control Systems at RWTH Aachen University, Germany, under the supervision of Christian Ebenbauer. He studied Mathematics and Engineering Cybernetics at the University of Stuttgart, Germany, and specialised in Control Theory, Scientific Computing and Learning Theory. He works at the intersection of Control Theory, Optimisation and Machine Learning, with a recent focus on the analysis and design of optimisation algorithms using tools from control theory.

Carsten Scherer received his Ph.D. degree in mathematics from the University of Würzburg (Germany) in 1991. In 1993, he joined Delft University of Technology (The Netherlands), where he held positions as an assistant and associate professor. From December 2001 until February 2010, he was a full professor within the Delft Center for Systems and Control at Delft University of Technology. Since March 2010, he has held the SimTech Chair for Mathematical Systems Theory in the Department of Mathematics at the University of Stuttgart (Germany). Dr. Scherer served as chair of the IFAC technical committee on Robust Control (2002-2008), and he has served as an associate editor for the IEEE Transactions on Automatic Control, Automatica, Systems and Control Letters, and the European Journal of Control. Since 2013, he has been an IEEE Fellow “for contributions to optimization-based robust controller synthesis”. Dr. Scherer’s main research activities cover various topics in applying optimization techniques to develop advanced controller design algorithms and their application to mechatronics and aerospace systems.

Title: A principled and automated tight analysis of first order optimization methods

Speaker: Julien Hendrickx, François Glineur, Adrien Taylor

Abstract: Like many other classes of algorithms, first-order optimization methods are very often characterized in terms of their worst-case performance. From a practical point of view, performance guarantees are mathematical proofs that mainly rely on precise combinations of inequalities derived from assumptions on the functions and methods. This often leads to long, technical, and not necessarily intuitive proofs that can be hard to follow for those who were not involved in their development. Moreover, proofs found in the literature very frequently produce worst-case bounds that are not as tight as possible, which leads to conservatism and makes performance comparisons and algorithmic tuning more challenging. In this presentation, we show how and why most convergence proofs of first-order optimization algorithms are actually very similar in nature, by relating them to the essence of worst-case analysis: computing worst-case scenarios. We then show how this point of view offers a principled way to approach and produce worst-case analyses of first-order methods, which can in many cases be automated. This approach has led to exact worst-case bounds for a multitude of first-order methods, together with examples of worst-case instances and new optimal algorithmic parameters. The presentation will be example-based, featuring methods such as the gradient method and its accelerated variants, projected gradient methods, distributed methods, Douglas-Rachford splitting, and methods involving inexact gradients. All the results presented can be reproduced numerically using the Performance Estimation Toolbox (PESTO). https://github.com/AdrienTaylor/Performance-Estimation-Toolbox
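To make "exact worst-case bound together with a worst-case instance" concrete, the sketch below checks one known tight result from the performance-estimation literature: for the gradient method with step size 1/L on L-smooth convex functions, f(x_N) - f* ≤ L‖x_0 - x*‖²/(4N+2), and a one-dimensional Huber-type function attains this value exactly. It is only an illustration under these assumptions: it does not set up or solve the underlying semidefinite program, does not use PESTO, and the function names and numbers are hypothetical choices for this example.

```python
import math


def huber(x: float, L: float, tau: float) -> float:
    """L-smooth convex Huber-type function: quadratic for |x| <= tau, linear beyond."""
    if abs(x) <= tau:
        return 0.5 * L * x * x
    return L * tau * abs(x) - 0.5 * L * tau * tau


def huber_grad(x: float, L: float, tau: float) -> float:
    """Derivative of huber(): L*x clipped to [-L*tau, L*tau], hence L-Lipschitz."""
    return max(-L * tau, min(L * tau, L * x))


def gradient_method(x0: float, L: float, tau: float, n_steps: int) -> float:
    """Run x_{k+1} = x_k - (1/L) * f'(x_k) for n_steps and return x_N."""
    x = x0
    for _ in range(n_steps):
        x -= huber_grad(x, L, tau) / L
    return x


def worst_case_bound(L: float, R: float, N: int) -> float:
    """Tight bound on f(x_N) - f* for step size 1/L, where R = |x_0 - x*|."""
    return L * R * R / (4 * N + 2)


L, R = 2.0, 1.0
for N in (1, 5, 20):
    tau = R / (2 * N + 1)            # kink placed so that every iterate stays on the linear part
    x_N = gradient_method(R, L, tau, N)
    gap = huber(x_N, L, tau)         # f* = 0, attained at x* = 0
    print(N, gap, worst_case_bound(L, R, N))
```

Each step moves the iterate by exactly tau on the linear part of the function, so after N steps x_N = R(N+1)/(2N+1) and the optimality gap equals the bound, which is what "tight" means here.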

Brief Bio(s): François Glineur received dual engineering degrees from Université de Mons and CentraleSupélec in 1997, and a PhD in Applied Sciences from Université de Mons in 2001. He visited Delft University of Technology and McMaster University as a postdoctoral researcher, then joined Université catholique de Louvain where he is currently a full professor of applied mathematics at the Engineering School, member of the Center for Operations Research and Econometrics and the Institute of Information and Communication Technologies, Electronics and Applied Mathematics. He was vice-dean of the Engineering School between 2016 and 2020. He has supervised ten doctoral students and authored more than sixty publications on optimization models and methods (with a focus on convex optimization and algorithmic efficiency) and their engineering applications, as well as on nonnegative matrix factorization and applications to data analysis.

Julien M. Hendrickx has been a professor of mathematical engineering at UCLouvain, in the Ecole Polytechnique de Louvain, since 2010. He obtained an engineering degree in applied mathematics (2004) and a PhD in mathematical engineering (2008) from the same university. He was a visiting researcher at the University of Illinois at Urbana-Champaign in 2003-2004, at National ICT Australia in 2005 and 2006, and at the Massachusetts Institute of Technology in 2006 and 2008. He was a postdoctoral fellow at the Laboratory for Information and Decision Systems of the Massachusetts Institute of Technology in 2009 and 2010, holding postdoctoral fellowships of the F.R.S.-FNRS (Fund for Scientific Research) and of the Belgian American Educational Foundation. He was also a resident scholar at the Center for Information and Systems Engineering (Boston University) in 2018-2019, holding a WBI.World excellence fellowship. Dr. Hendrickx is the recipient of the 2008 EECI award for the best PhD thesis in Europe in the field of Embedded and Networked Control, and of the Alcatel-Lucent-Bell 2009 award for a PhD thesis on original new concepts or applications in the domain of information or communication technologies.

Adrien Taylor is a researcher at Inria and Ecole Normale Supérieure in Paris. He obtained his PhD from Université catholique de Louvain under the supervision of Julien Hendrickx and François Glineur. His research interests include optimization and machine learning, with a particular emphasis on semidefinite programming and first-order methods. Adrien Taylor was a finalist for the triennial Tucker Prize and is a recipient of the 2018 IBM-FNRS innovation award and the 2018 ICTEAM thesis award.

Title: Enabling and accelerating nested multi-level algorithms via predictive sensitivity

Speaker: Florian Dörfler

Abstract: Many widely used optimization algorithms, for example dual ascent, interior-point methods, and bilevel gradient descent, have nested levels, where the iterations of the lower levels need to converge before the next upper-level iteration can proceed. Such multi-level algorithms can be represented as control systems with multiple time scales. A classical approach for systems with multiple time scales is to design simple controllers within each time scale, connect them through singular perturbation terms, and finally certify stability of the interconnected system for a sufficiently large time-scale separation via singular perturbation analysis. However, relying on time-scale separation slows down the convergence of the interconnected system. Instead, the recently proposed predictive-sensitivity approach makes it possible to connect time-scale separated systems in a single time scale while preserving their stability, without requiring any time-scale separation. To this end, the predictive-sensitivity approach uses a feed-forward term to modify the dynamics of the faster systems so that they anticipate the dynamics of the slower ones. In this talk, we analyze how to enable and accelerate nested multi-level algorithms via the predictive-sensitivity approach, and we illustrate its utility with numerical case studies.
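The feed-forward idea can be illustrated on a linear toy pair of our own choosing (hypothetical coefficients, not one of the case studies from the talk). The slow system x' = p·x + q·y is designed assuming the fast state sits at its equilibrium y = h(x) = a·x, which gives the stable reduced dynamics x' = -x. A naive fast system y' = -k(y - h(x)) only keeps the loop stable here if k > 1, i.e. if it is fast enough. Adding the feed-forward term h'(x)·x', one simple reading of "anticipating the slower dynamics", makes the tracking error e = y - h(x) obey e' = -k·e regardless of x, so any k > 0 works.

```python
import math


def simulate(k: float, predictive: bool, dt: float = 0.01, t_end: float = 30.0):
    """Forward-Euler simulation of a slow/fast linear pair; returns the final state (x, y).

    Slow system:  x' = p*x + q*y, designed for y = h(x) = a*x (reduced dynamics x' = -x).
    Fast system:  y' = -k*(y - h(x)), optionally plus the feed-forward term h'(x) * x'.
    """
    p, q, a = 1.0, -1.0, 2.0            # hypothetical toy coefficients, chosen for illustration
    x, y = 1.0, 0.0
    for _ in range(int(round(t_end / dt))):
        x_dot = p * x + q * y
        y_dot = -k * (y - a * x)        # fast system tracks its equilibrium h(x) = a*x
        if predictive:
            y_dot += a * x_dot          # feed-forward: anticipate how h(x) will move
        x, y = x + dt * x_dot, y + dt * y_dot
    return x, y


for predictive in (False, True):
    x_end, y_end = simulate(k=0.5, predictive=predictive)
    print("predictive" if predictive else "naive", math.hypot(x_end, y_end))
```

With k = 0.5 the naive interconnection grows without bound while the feed-forward version decays to the origin; with k = 5 both converge, which mirrors the classical requirement of sufficient time-scale separation that the feed-forward term removes.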

Brief Bio: Florian Dörfler is an Associate Professor at the Automatic Control Laboratory at ETH Zürich and the Associate Head of the Department of Information Technology and Electrical Engineering. He received his Ph.D. degree in Mechanical Engineering from the University of California at Santa Barbara in 2013, and a Diplom degree in Engineering Cybernetics from the University of Stuttgart in 2008. From 2013 to 2014, he was an Assistant Professor at the University of California Los Angeles. His primary research interests are centered around control, optimization, and system theory, with applications in network systems, in particular electric power grids. He is a recipient of the distinguished young researcher awards of IFAC (Manfred Thoma Medal 2020) and EUCA (European Control Award 2020). His students were winners or finalists for Best Student Paper awards at the European Control Conference (2013, 2019), the American Control Conference (2016), the Conference on Decision and Control (2020), the PES General Meeting (2020), and the PES PowerTech Conference (2017). He is furthermore a recipient of the 2010 ACC Student Best Paper Award, the 2011 O. Hugo Schuck Best Paper Award, the 2012-2014 Automatica Best Paper Award, the 2016 IEEE Circuits and Systems Guillemin-Cauer Best Paper Award, and the 2015 UCSB ME Best PhD award.

Title: Optimization Algorithms as Robust Feedback Controllers

Speaker: Saverio Bolognani

Abstract: We consider the problem of driving a stable system to an operating point that minimizes a given cost, and of tracking this optimal point in the presence of exogenous disturbances. This class of problems arises naturally in many engineering applications, e.g., in the real-time operation of electric power systems. We will show that this task can be achieved by interpreting and using iterative optimization algorithms as robust output-feedback controllers. Special care is required to satisfy input and output constraints at all times and to maintain stability of the closed-loop system. We will see how both properties can be guaranteed at the controller design stage.
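A minimal sketch of the idea, assuming a scalar stable linear plant x_{k+1} = a·x_k + b·u + w with output y = x, an unknown constant disturbance w, and a known steady-state sensitivity H = b/(1 - a). A projected-gradient step on the cost Φ(u, y) = ½u² + ½(y - r)² is evaluated with the measured output instead of a model prediction, and the projection onto [u_min, u_max] keeps the input feasible at every step. This is a plain textbook-style controller chosen for illustration, not the specific design from the talk; it handles input constraints only, and all names and numbers are hypothetical.

```python
def feedback_optimization(alpha: float = 0.1, n_steps: int = 200):
    """Projected-gradient controller in closed loop with a scalar stable plant.

    Plant (w unknown to the controller):  x_{k+1} = a*x_k + b*u + w,  y = x
    Steady-state map:                     y_ss = H*u + d,  H = b/(1-a),  d = w/(1-a)
    Cost:                                 Phi(u, y) = 0.5*u**2 + 0.5*(y - r)**2
    Returns the final input, the final output y = x, and all applied inputs.
    """
    a, b, w = 0.5, 1.0, 0.3              # hypothetical plant and disturbance
    H = b / (1.0 - a)                    # steady-state sensitivity dy/du (= 2.0)
    r, u_min, u_max = 3.0, -1.0, 1.0     # output reference and input constraints
    x, u = 0.0, 0.0
    inputs = []
    for _ in range(n_steps):
        y = x                                          # measurement replaces a model prediction
        grad = u + H * (y - r)                         # dPhi/du along the steady-state map
        u = min(u_max, max(u_min, u - alpha * grad))   # projection keeps u feasible at all times
        inputs.append(u)
        x = a * x + b * u + w                          # plant responds to the new input
    return u, x, inputs


u_final, y_final, applied = feedback_optimization()
print(u_final, y_final, min(applied), max(applied))
```

For these numbers the loop settles at u ≈ 0.96 and y ≈ 2.52, the minimizer u* = H(r - d)/(1 + H²) of the steady-state problem with d = w/(1 - a) = 0.6, even though w is never passed to the controller; during the transient the input rests on its upper bound for a few steps.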

Brief Bio: Saverio Bolognani received the B.S. degree in Information Engineering, the M.S. degree in Automation Engineering, and the Ph.D. degree in Information Engineering from the University of Padova, Italy, in 2005, 2007, and 2011, respectively. In 2006-2007, he was a visiting graduate student at the University of California at San Diego. In 2013-2014 he was a Postdoctoral Associate at the Laboratory for Information and Decision Systems of the Massachusetts Institute of Technology in Cambridge (MA). He is currently a Senior Researcher at the Automatic Control Laboratory at ETH Zurich. His research interests include the application of networked control system theory to power systems, distributed control and optimization, and cyber-physical systems.

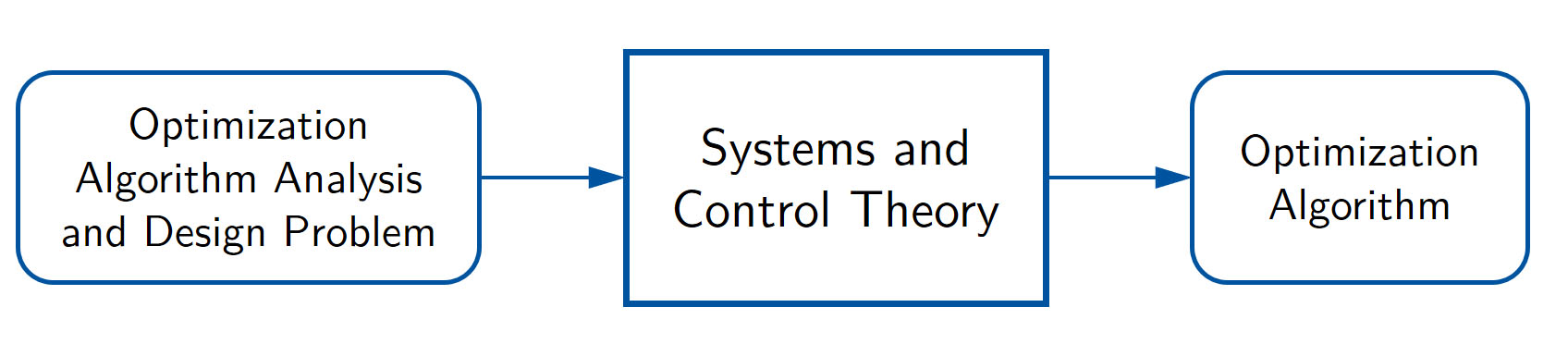

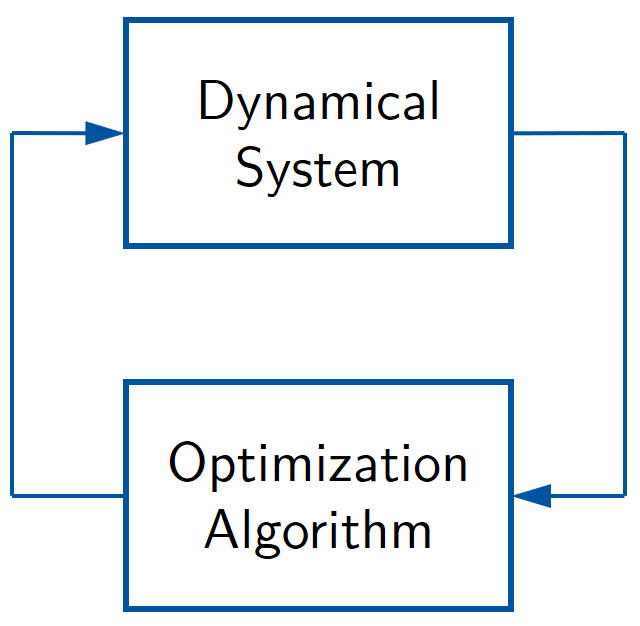

are motivated by the basic fact that many optimization algorithms are dynamical systems, so that tools from control theory can be used to analyze and synthesize them. A further motivation is the increasing use of online optimization in feedback algorithms, driven by the wide availability of online computing power in all kinds of systems. Prominent examples of such algorithms are receding horizon schemes and extremum control. It can be expected that artificial intelligence techniques and learning algorithms based on large data sets will further accelerate this development.
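As a small instance of the view that optimization algorithms are dynamical systems, assume a strongly convex quadratic f(x) = ½xᵀQx with Q = diag(m, L). Gradient descent x_{k+1} = x_k - α∇f(x_k) is then the linear time-invariant system x_{k+1} = (I - αQ)x_k, so its convergence rate is the spectral radius max(|1 - αm|, |1 - αL|), which the step size α = 2/(m + L) minimizes to (L - m)/(L + m). The helper names below are illustrative; for non-quadratic f, the gradient becomes a nonlinearity in the feedback loop, which is where robust-control analysis tools come in.

```python
import math


def spectral_radius(alpha: float, m: float, L: float) -> float:
    """Spectral radius of the iteration matrix A = I - alpha*Q for Q = diag(m, L)."""
    return max(abs(1.0 - alpha * m), abs(1.0 - alpha * L))


def gradient_descent(x0: tuple, alpha: float, m: float, L: float, n_steps: int) -> tuple:
    """Simulate gradient descent on f(x) = 0.5*(m*x1**2 + L*x2**2)."""
    x1, x2 = x0
    for _ in range(n_steps):
        # grad f(x) = Q x, so each step applies the linear map x -> (I - alpha*Q) x
        x1, x2 = x1 - alpha * m * x1, x2 - alpha * L * x2
    return x1, x2


m, L, N = 1.0, 10.0, 50
alpha = 2.0 / (m + L)                     # step size that minimizes the spectral radius
rho = spectral_radius(alpha, m, L)        # (L - m) / (L + m) = 9/11 for these values
x_N = gradient_descent((1.0, 1.0), alpha, m, L, N)
contraction = math.hypot(*x_N) / math.hypot(1.0, 1.0)
print(rho, contraction, rho ** N)
```

Because both eigenvalues of I - αQ have magnitude 9/11 for this step size, the observed contraction after N steps matches rho**N, i.e. the rate predicted by the linear-systems analysis.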
